Perceptual Hashes: Difference between revisions

m (formatting, new links) |

(Formatting) |

||

| Line 30: | Line 30: | ||

==Size of the Hash:== | ==Size of the Hash:== | ||

Most of the algorithms I have seen in the literature calculate the hash in an integer | Most of the algorithms I have seen in the literature calculate the hash in an integer. | ||

This limits most of them to 64 bit hashes or smaller! | This limits most of them to 64 bit hashes or smaller! | ||

I 'accumulated' and compared my hashes in HEX strings, so 256bit hashes are easy | I 'accumulated' and compared my hashes in HEX strings, so 256bit hashes are easy. | ||

You need a HEX string to do database searches of your hash anyway! | You need a HEX string to do database searches of your hash anyway! | ||

Hacking bits out of a HEX string for comparison is just a little harder than from integers | Hacking bits out of a HEX string for comparison is just a little harder than from integers. | ||

==How Perceptual Hashes are compared:== | ==How Perceptual Hashes are compared:== | ||

| Line 64: | Line 64: | ||

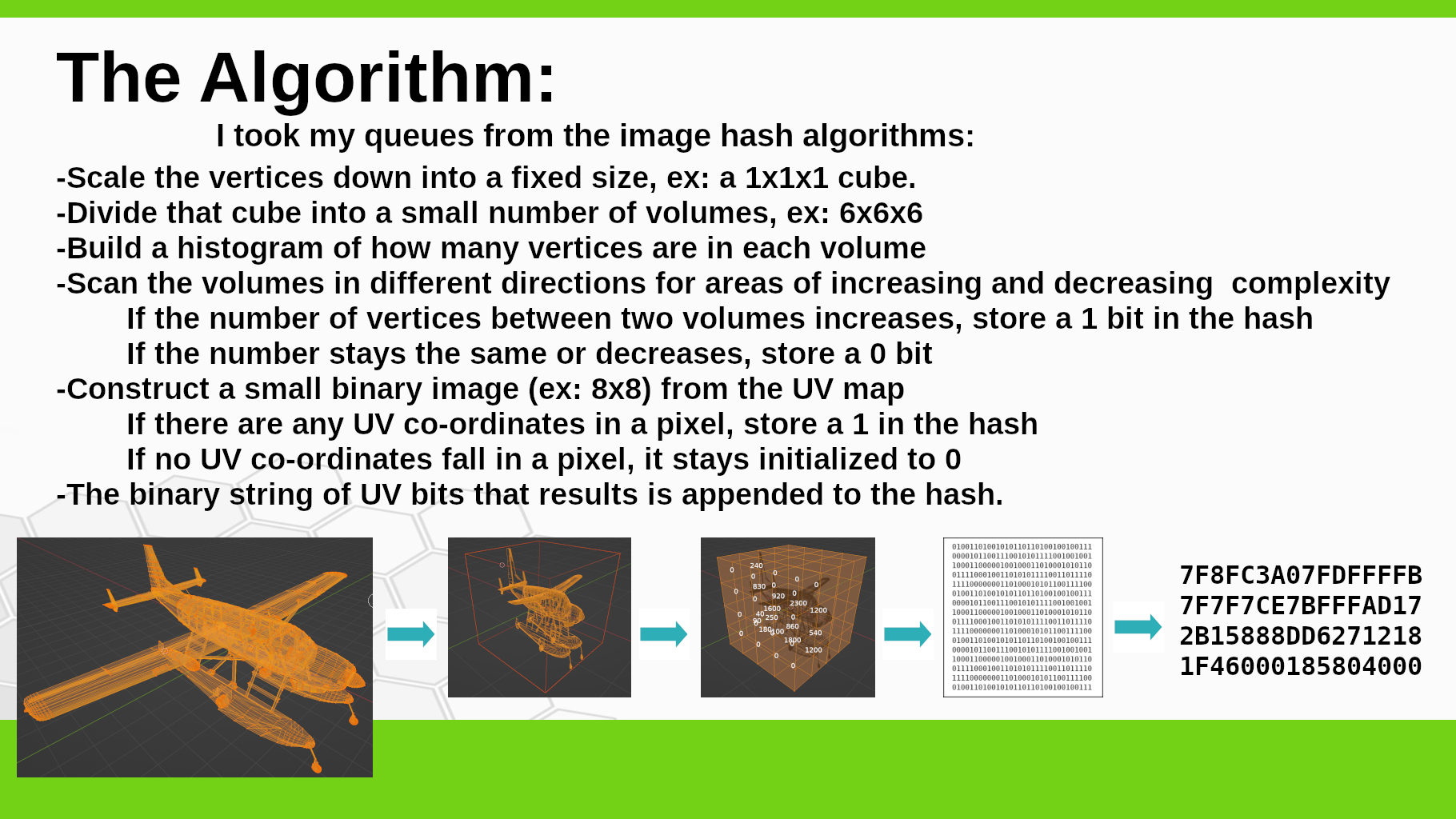

==How to generate a hash from a 3D object:== | ==How to generate a hash from a 3D object:== | ||

[[File:Slide090w.png]] | [[File:Slide090w.png]] | ||

* I took my clues from the image hash algorithms: | |||

* Scale the vertices down into a fixed size, ex: a 1x1x1 cube. | |||

* Divide that cube into a small number of 'voxels', ex: 4x4x4 | |||

* Build a histogram of how many vertices are in each voxel | |||

* Scan the voxels in different directions for areas of increasing and decreasing complexity | |||

** If the number of vertices in a voxel increases, store a 1 bit in the hash | |||

** If the number stays the same or decreases, store a 0 bit | |||

* Construct a small binary image (ex: 8x8) from the UV map | |||

** If there are any UV co-ordinates in a pixel, store a 1 in that location. | |||

** If no UV co-ordinates fall in a pixel, it stays initialized to 0 | |||

** The binary string of UV bits that results is appended to the hash. | |||

So what I end up with is a string of bits with two sections: each bit in the vertex section relates to the complexity at a given location, each bit in the UV section relates to a location in the textures. The Hamming distance can be used on both sections of this hash, it can be treated as one big string of bits and the same code used to calculate similarity in images can be used. | So what I end up with is a string of bits with two sections: each bit in the vertex section relates to the complexity at a given location, each bit in the UV section relates to a location in the textures. The Hamming distance can be used on both sections of this hash, it can be treated as one big string of bits and the same code used to calculate similarity in images can be used. | ||

Revision as of 05:03, 3 June 2023

Perceptual Hashes for Copyright Protection and Copybot Detection

Perceptual hashes can be used to tell you how similar two files are to each other, without having to store either file. This has been used to do super-fast image searches (tineye.com). It can also be used to detect copyright violations by detecting slightly modified versions of 'borrowed' images.

I'll describe briefly how, for images, perceptual hashes are generated and compared. Then I'll briefly describe an algorithm I have come up with for generating perceptual hashes of 3D models. Since objects in-world are made out of mostly 3D models and images, these two hash algorithms can be used to detect many copy-botted objects, even ones that have been modified slightly to hide the theft.

I have two proposed uses for these algorithms:

- Detecting copy-botted items as they are uploaded into a grid, and

- Building a "patent office" where creators can register hashes of their objects and use this as evidence later to prove that their copyrights have been infringed.

The code for calculating these hashes will be released to the public domain. The WEB page and Grid are open for you to try out and test for me!

A hash is a mathematical algorithm that converts a large digital object into small fixed length string of bits.

You can't use crypto hashes for comparing images:

- Changing one pixel in an image completely changes the SHA256 hash.

- Just loading a JPG image and saving it again can change the crypto hash.

- Scaling an image or changing any colors changes the crypto hash.

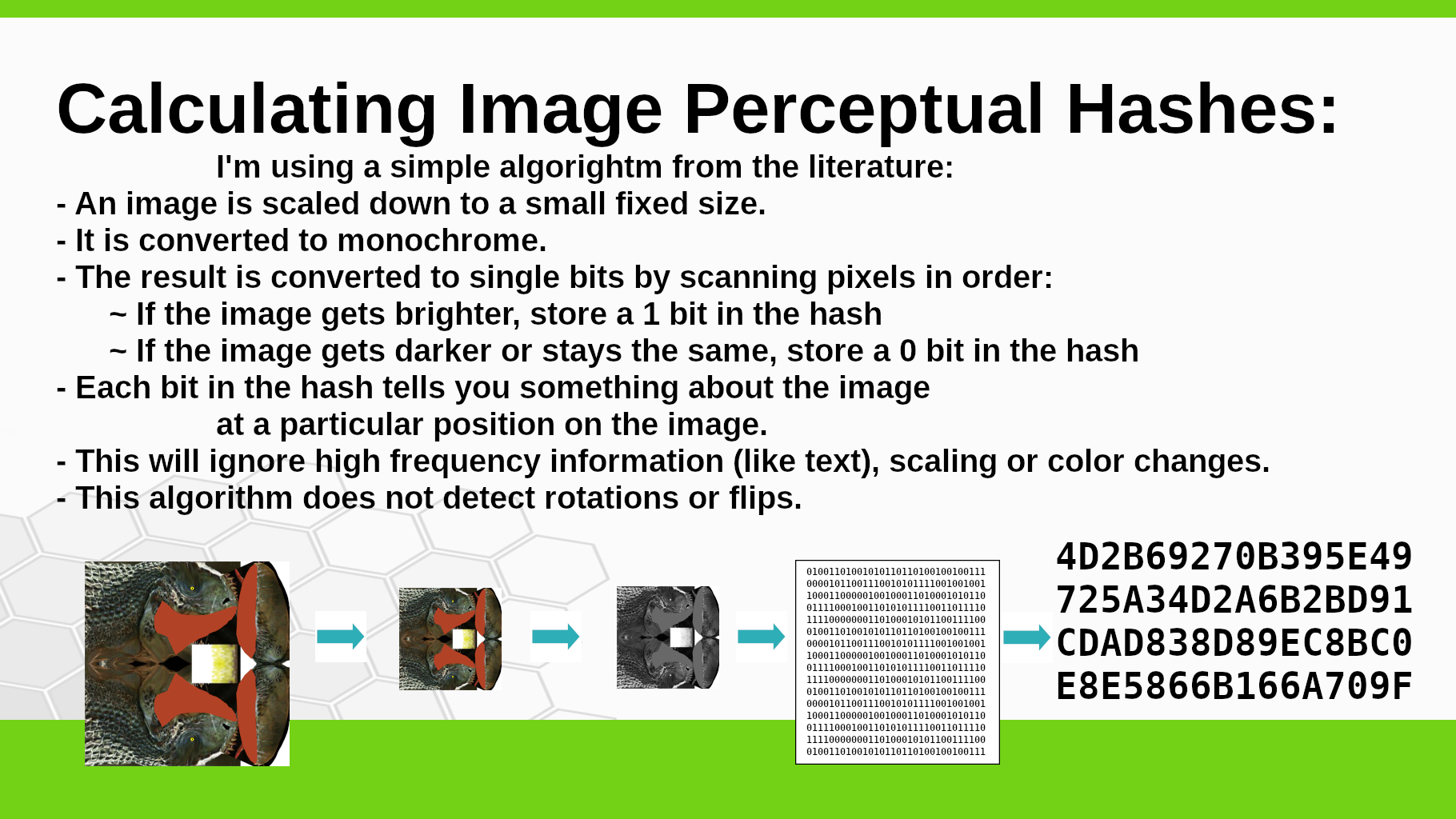

How Perceptual Hashes for Images are Calculated:

- The image is scaled down to a very small size (typically 8x8 pixels!).

- It is converted to black and white.

- The black and white image is reduced into single bits to make a hash.

Scaling filters out a lot of high-frequency data, like a single pixel change or some text added to the image. You could just threshold the image. You could do complex frequency analysis, like a wavelet transform on the image. An in-between approach is to scan the image in different directions for places where the image gets brighter (convert to a 1 bit), stays the same or gets darker (convert to a 0 bit). I read in the literature that this works well, and it is easy to calculate.

- This turns out to always produce an all 0 hash for all flat images!

- But this is not an image that most people would try to copyright.

Size of the Hash:

Most of the algorithms I have seen in the literature calculate the hash in an integer. This limits most of them to 64 bit hashes or smaller! I 'accumulated' and compared my hashes in HEX strings, so 256bit hashes are easy. You need a HEX string to do database searches of your hash anyway! Hacking bits out of a HEX string for comparison is just a little harder than from integers.

How Perceptual Hashes are compared:

Each bit in a perceptual hash is on or off based on some localized feature of the image. So two similar images should have the same on and off bits in many locations.

Each bit in a perceptual hash is on or off based on some localized feature of the image. So two similar images should have the same on and off bits in many locations.

The Hamming Distance is a simple algorithm to calculate how many bits are different between two hashes.

You can calculate the Hamming distance of two BIGINTs in MySQL, which would work for 64 bit hashes.

MySQL 8.0 has added the ability to do bit calculations on BLOBs, which would allow calculations on larger hashes.

In earlier versions of MySQL you can store your larger hashes as multiple columns of BIGINTs and still to reasonably fast searches by combining those columns in a single calculation.

What Parts of a 3D Object can be used to make a hash?

- A 3D object is stored as several different components, the important ones are:

- Several different images. I know how to hash those already!

- Several different mesh prims, (I ignore OpenSim 'system' prims).

- Each prim has a list of vertices

- A list of normals

- A list of quadrangles, often simplified to triangles

- Table of UV map locations

- The order of the vertices is not important, only their locations matter. I chose to analyze those.

- The normals are less important to the geometry, I chose to ignore those for now.

- The table of quadrangles can change drastically depending on the order of the vertices. Ignored.

- The UV map of an object is an important part of a 3D object, I do want to analyze this

How to generate a hash from a 3D object:

- I took my clues from the image hash algorithms:

- Scale the vertices down into a fixed size, ex: a 1x1x1 cube.

- Divide that cube into a small number of 'voxels', ex: 4x4x4

- Build a histogram of how many vertices are in each voxel

- Scan the voxels in different directions for areas of increasing and decreasing complexity

- If the number of vertices in a voxel increases, store a 1 bit in the hash

- If the number stays the same or decreases, store a 0 bit

- Construct a small binary image (ex: 8x8) from the UV map

- If there are any UV co-ordinates in a pixel, store a 1 in that location.

- If no UV co-ordinates fall in a pixel, it stays initialized to 0

- The binary string of UV bits that results is appended to the hash.

So what I end up with is a string of bits with two sections: each bit in the vertex section relates to the complexity at a given location, each bit in the UV section relates to a location in the textures. The Hamming distance can be used on both sections of this hash, it can be treated as one big string of bits and the same code used to calculate similarity in images can be used.

Preliminary testing:

On a small grid (90K assets) I added a database table and calculated a perceptual hash for each image and mesh. I used this to detect copies of several mesh items of my own. Then I searched for parts of a contested mesh: the Athena mesh body. For hysterical reasons, this body is actually made out of a dozen separate mesh parts (pre BoM), so I searched for just one of those. I found many identical copies, and many copies of an older version that had a Hamming distance of 7.

Use Case 1: OpenSim Copybot Detection:

Every item stored in an OpenSim asset database already has a cryptographic hash (SHA256) stored with it to detect exact duplicates. This is done to prevent storing the same image many times which would waste disk space. If the database also stored perceptual hashes, OpenSim could check for copybotted items. An item that has full-perm permissions but is perceptually similar to an item that should not be full perm is one red flag to look for. What do you do when you detect a suspect item? You could temporarily lock the item from being rezzed until the person who uploaded it provides some proof that they are the owner or have the rights to a full perm copy.

- One way to impliment this would require changes to the OpenSim code.

- In the mean time, a background task on the server could scan for copyboted items.

Use case 2: Patent Office:

I am in the process of creating a database, grid and WEB site where creators can bring items to be 'registered'. You can go try these out now, although it is all very preliminary at the moment.

- The database stores only hashes, not the item data itself.

- You can bring items to the WEB page and register them.

- It currently reads JPG and PNG image filess, DAE 3D object files.

The registration Grid has some helper scripts (written in PHP) to find all the parts of an item and register them for you in the database. (No changes to OpenSim were necessary). This process will find all the images used in a build and all the mesh objects that it is made from. I'd recommend also going to the WEB page and adding a picture of what it looks like.

- You will be able to use the 'patent office' to search and compare suspected copybot objects against the database

- A stand-alone console utility is under development to generate and compare hashes offline.

Trust:

Why would creators want to bring their creations to let my code look at them?

- The database does not store copies of your creations, only hashes.

- The grid does store copies of items brought there, but they will be deleted from the assets.

- I promise I will not steal your intellectual property!

- (Which is exactly what you would expect a thief to say).

- Hey, I'm also a creator! I want to protect my own items.

- (Which is exactly what you would expect a thief to say).

- So I have been looking for a non-profit organization to take control of the github, WEB and Grid.

- Selby Evans says that the NMA (New Media Arts) is interested in doing this.

- Then creators would have more confidence that it is safe to scan their items.

- The sources of all the code involved is Open Source.

What's next?

- I want to write code for the WEB page to read more and newer 3D file formats, like FBX, KTX and GLTF

- I want to convert the hash algorithms into other languages. Want to help?

- I want to calculate hashes for other file types. I'd be particularly interested in scripts! There are lots of articles about detecting plagiarism in text files, that might work. But I have not had time to research this and see if there is a perceptual hash approach.

Where to go?

- 'Patent Office' WEB page: MVRAgency.org

- HyperGrid URI of the 'Office': MVRAgency.org:8002

Github (sources to the code) https://github.com/Virtual-World-Systems/Development/tree/main/Collaborator/KayakerMagic

Try out https://tineye.com to search the WEB with perceptual hashes.

A couple of references I started from:

- https://en.wikipedia.org/wiki/Perceptual_hashing

- https://jenssegers.com/perceptual-image-hashes

- https://phash.org

Definition

A perceptual hash differs from a cryptographic hash in that it varies only slightly with slight differences in source files, whereas a cryptographic hash produces a completely different output number even if a single bit of the source file is changed. This allows for a measurement of the amount of difference between files in order to determine how similar one piece of content is with another, ergo how derivative it might be, what parts might have been copied from another work that might be copyrighted.